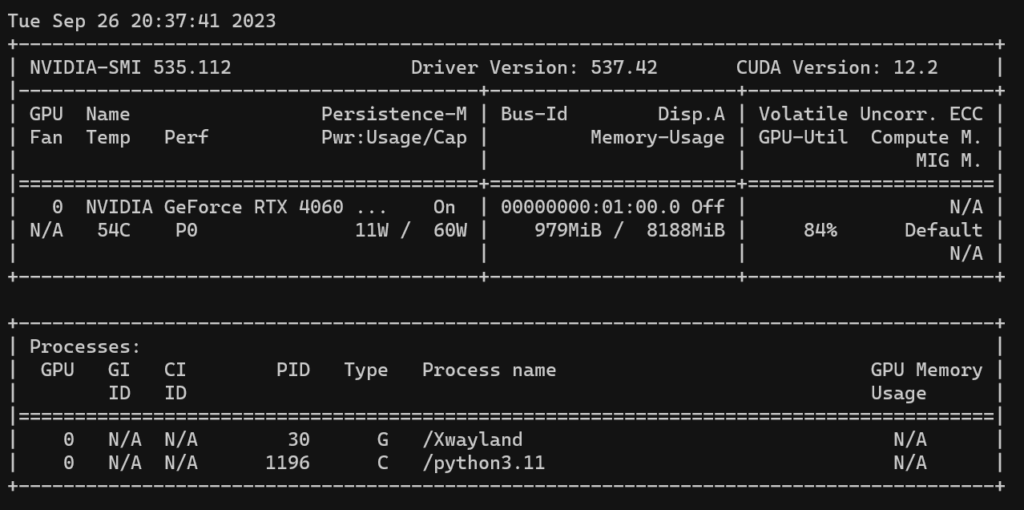

By default, TensorFlow eagerly preallocates GPU memory. The reason is to reduce memory fragmentation. The amount of memory it allocates is around 80% of the available memory. Even when running a rather small model with fewer than 45k parameters, the monitoring tool `nvidia-smi` shows this:

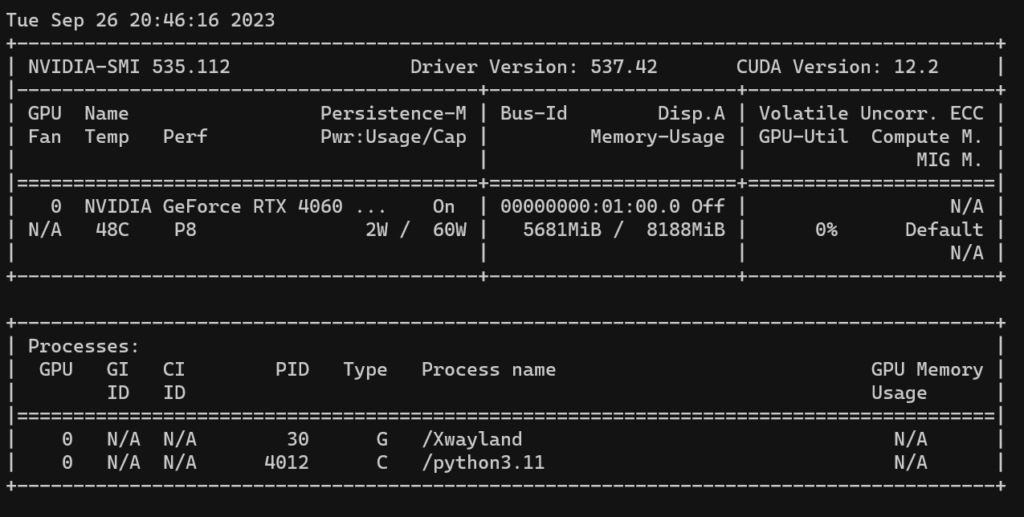

The screenshot shows that roughly 5.6 GB of the available 8 GB are allocated. The second process (Xwayland) had allocated only a couple of megabytes beforehand and can be neglected.
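To read the same numbers from a script instead of the interactive `nvidia-smi` view, the query mode of `nvidia-smi` can be parsed. The following is a minimal sketch, assuming `nvidia-smi` is on the `PATH`. The functions `parse_memory_csv` and `query_gpu_memory` are hypothetical helpers for illustration, not part of TensorFlow or any other library.

```python
import subprocess


# Hypothetical helper: parse the CSV printed by
#   nvidia-smi --query-gpu=memory.used,memory.total --format=csv,noheader,nounits
# Each line has the form "<used MiB>, <total MiB>" and describes one GPU.
def parse_memory_csv(csv_text: str) -> list[tuple[int, int, float]]:
    rows = []
    for line in csv_text.strip().splitlines():
        # int() tolerates the space after the comma
        used, total = (int(value) for value in line.split(","))
        rows.append((used, total, used / total))  # (used, total, fraction used)
    return rows


# Hypothetical helper: run nvidia-smi and return one tuple per GPU.
def query_gpu_memory() -> list[tuple[int, int, float]]:
    result = subprocess.run(
        [
            "nvidia-smi",
            "--query-gpu=memory.used,memory.total",
            "--format=csv,noheader,nounits",
        ],
        capture_output=True,
        text=True,
        check=True,  # raise if nvidia-smi exits with an error
    )
    return parse_memory_csv(result.stdout)
```

For example, a (made-up) output line `5600, 8192` parses to a used fraction of about 0.68. Keeping the parsing separate from the `subprocess` call means it can be checked on a machine without a GPU.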

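Adding the following lines at the top of the script, before the GPUs are initialized:

```python
import tensorflow as tf

# Must run before any GPU is initialized (i.e. before the first op runs);
# otherwise TensorFlow raises a RuntimeError.
gpus = tf.config.list_physical_devices('GPU')
if gpus:
    for gpu in gpus:
        tf.config.experimental.set_memory_growth(gpu, True)
```

This asks TensorFlow to disable eager preallocation on the GPU and to allocate only as much GPU memory as the task actually needs. The allocation starts small and grows as the program requires more memory; it is not released again, to avoid fragmentation. In the monitoring tool, this may then look something like this: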